Agree or Disagree? A Demonstration of An Alternative Statistic to Cohen's Kappa for Measuring the Extent and Reliability of Ag

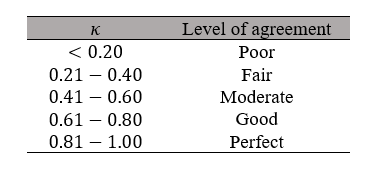

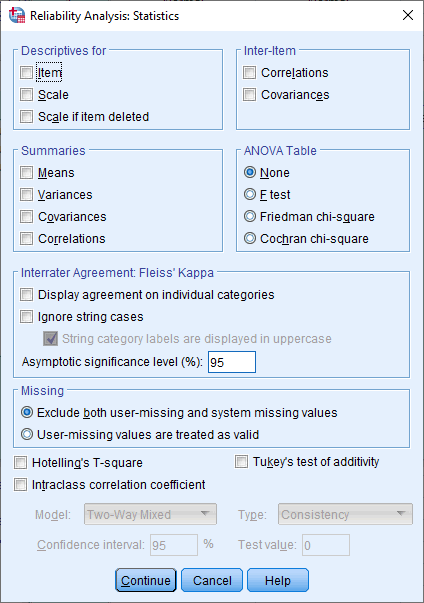

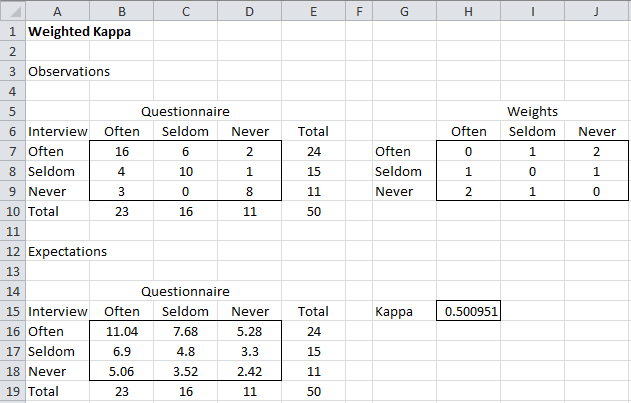

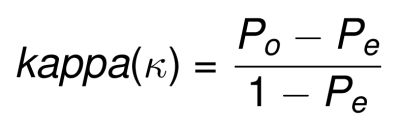

Cohen's Kappa and Fleiss' Kappa— How to Measure the Agreement Between Raters | by Audhi Aprilliant | Medium

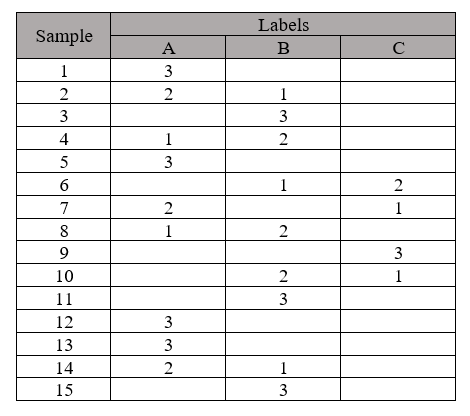

How can I calculate Cohen's Kappa inter-rater agreement coefficient between two raters when a category was not used by one of the raters?

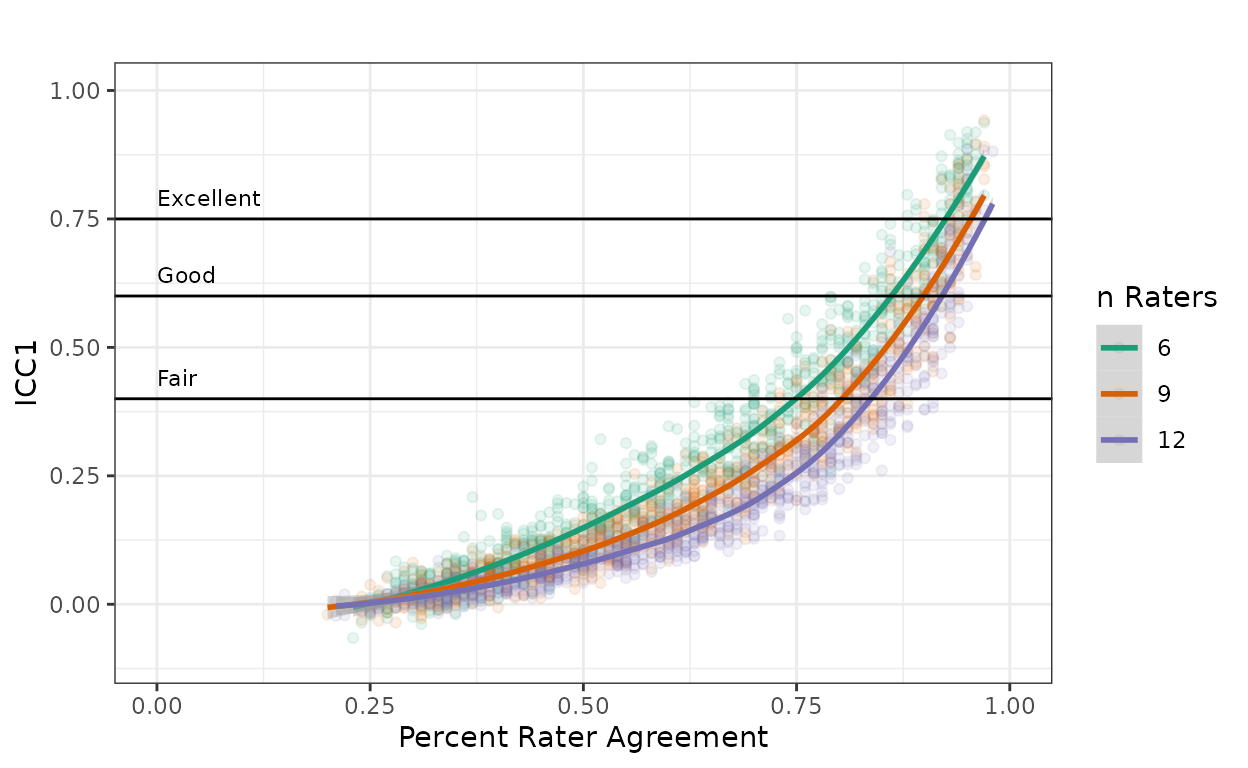

Power curve and specificity curves when k = 5 and N = 250 in the same... | Download Scientific Diagram

![Fleiss Kappa [Simply Explained] - YouTube](https://i.ytimg.com/vi/ga-bamq7Qcs/mqdefault.jpg)